How We Helped a Client Overcome Challenges in Building an AI Chatbot – Part 1

At Nextbridge, we set out to develop an AI chatbot that helps employees quickly find information in the company’s internal policies and handbook. Our objective was an efficient, smooth solution that lets employees interact with company resources easily.

This project reflects our commitment to using AI technology and IoT innovations to enhance workplace productivity and employee experience, much like our exploration of why chatbots are so popular. Like any AI project, it was as challenging as it was exciting. In this article, we share the goal, the process we followed, the tools and technologies we used, and the hurdles we faced in building a reliable AI system.

The Goal

We set a simple goal: to create an AI-based chatbot that gives employees easy, real-time access to the company’s important policies and handbooks. The solution had to be responsive, efficient, and able to process several document formats, including Word files and PDFs.

The Tech Stack

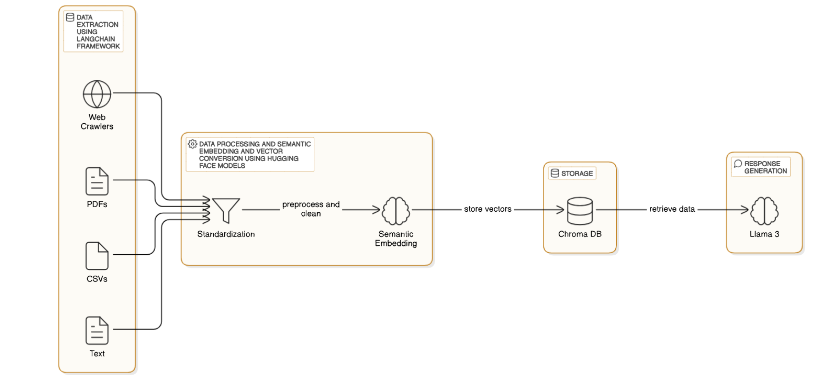

With the above requirements in mind, we laid a foundation for our AI chatbot to ensure high performance, scalability, and accuracy. Here is an overview of key technologies integrated into the system:

Llama Model by Meta AI: We used this model due to its natural language processing capabilities, a cornerstone of modern machine learning workflows. It provided the necessary base to handle complex queries and generate accurate responses.

LangChain: We used this framework to orchestrate the document processing pipeline, integrating tools for extracting data from various formats, including PDFs and Word files. This lets the chatbot handle different file sources and types of information effectively.
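As a rough illustration of the loading step, the sketch below picks a loader class by file extension. It assumes the `langchain-community` package and its `PyPDFLoader` and `Docx2txtLoader` classes (which in turn need `pypdf` and `docx2txt`). The helper names `loader_name_for` and `load_documents` are hypothetical and not taken from the project.

```python
from pathlib import Path

# Map file extensions to LangChain loader class names.
# The mapping itself is an assumption made for illustration.
LOADERS = {
    ".pdf": "PyPDFLoader",
    ".docx": "Docx2txtLoader",
}


def loader_name_for(path: str) -> str:
    """Return the loader class name for a file, based on its extension."""
    suffix = Path(path).suffix.lower()
    if suffix not in LOADERS:
        raise ValueError(f"Unsupported document format: {suffix or path}")
    return LOADERS[suffix]


def load_documents(path: str):
    """Load one file into a list of LangChain Document objects."""
    # Imported lazily so the dispatch logic works without LangChain installed.
    from langchain_community import document_loaders

    loader_cls = getattr(document_loaders, loader_name_for(path))
    return loader_cls(path).load()
```

Calling `load_documents("handbook.pdf")` would return the file's pages as documents ready for splitting and embedding, while an unsupported extension fails early with a clear error.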

Hugging Face Models: We chose Hugging Face models for text preprocessing, semantic embedding, and vectorization. These models assisted in converting the raw data into a structured format that the Llama model could easily interpret.

ChromaDB: ChromaDB provides the infrastructure to store and manage vector embeddings generated from processed text. We chose this database to efficiently store and retrieve the relevant information whenever needed.
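To make the role of the vector store concrete, here is a toy, dependency-free sketch of embedding-based retrieval. It is not ChromaDB's API: `embed` is a hypothetical bag-of-words stand-in for a real Hugging Face embedding model, `TinyVectorStore` is an illustrative in-memory class that ranks stored chunks by cosine similarity, and the policy sentences are made up.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in embedding: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Illustrative in-memory store that keeps (text, vector) pairs."""

    def __init__(self):
        self._items = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def query(self, question: str, k: int = 1) -> list[str]:
        """Return the k stored chunks most similar to the question."""
        q = embed(question)
        ranked = sorted(
            self._items, key=lambda item: cosine(q, item[1]), reverse=True
        )
        return [text for text, _ in ranked[:k]]


store = TinyVectorStore()
store.add("Employees receive 20 days of annual leave per year.")  # made-up policy
store.add("Laptops must be returned to IT on the last working day.")  # made-up policy
print(store.query("How many days of annual leave do employees get?"))
```

In a real pipeline, the retrieved chunks would be passed to the language model as context for answering the employee's question.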

Python/Flask Application: We integrated Llama with a Python/Flask application that handled response generation and user interactions. This is what formed the core of the chatbot’s UI, ensuring a smooth interaction between the employees and the AI.

Key Challenges

While helping our client build the AI chatbot, we ran into several technical issues, most of them tied to the Llama model, and we worked to address them with optimization techniques. The issues we faced and the techniques we used are described below.

High Resource Demand

The Llama model requires significant memory for both loading and inference, which led to frequent Out-of-Memory (OOM) errors (a common hurdle in scaling AI chatbots) and made efficient memory management a crucial challenge. Responses were also slow: sometimes the model took more than 10 minutes to return a result.
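A back-of-the-envelope estimate shows why memory becomes the bottleneck. The sketch below computes the memory needed just to hold the model weights (number of parameters times bytes per parameter). The 7-billion-parameter size is an assumption for illustration, since the Llama variant is not specified here, and real usage is higher once activations and the key-value cache are included.

```python
def weight_memory_gib(n_params: float, bits_per_param: int) -> float:
    """Memory needed to store the weights alone, in GiB."""
    return n_params * bits_per_param / 8 / 2**30


N_PARAMS = 7e9  # assumed model size, not stated in the article

for label, bits in [("float32", 32), ("float16", 16), ("int8", 8), ("4-bit", 4)]:
    print(f"{label:>8}: {weight_memory_gib(N_PARAMS, bits):5.1f} GiB")
```

Under this assumption, the weights alone take roughly 26 GiB in float32 and about 13 GiB in float16, which already exceeds the memory of many single machines.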

Optimization Techniques

To address these limitations, we explored several techniques, including:

- Model quantization to reduce memory usage

- Batching to optimize inference performance

- Memory optimization through smart caching strategies

- GPU & CPU offloading to distribute computational load effectively
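To illustrate the first item in the list, the sketch below applies simple symmetric 8-bit quantization to a handful of made-up weights in plain Python. It is a conceptual example only: the quantization scheme and library used in the project are not specified here, and the function names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the quantized integers."""
    return [q * scale for q in q_weights]


weights = [0.42, -1.27, 0.05, 0.88]  # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight can then be stored in one byte instead of four (float32) or two (float16), at the cost of a rounding error of at most half the scale per weight.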

Alternate Solution

To overcome these challenges, we evaluated alternative models to replace Meta AI's Llama. After thorough research, we selected DeepSeek R1 for our chatbot because of its efficiency and optimized resource usage.

Stay tuned for our next article, where we’ll share how we integrated DeepSeek R1 with various libraries and models to build NextChatbot successfully!
